Wrong at the Speed of AI: Why Hallucinations and Regulators Both Provide Insight into Organizational AI Risk

By: George T. Tziahanas | January 23, 2024

There have been plenty of articles written about potential threats from AI, with some even concerned that humanity’s very existence is at stake. Last year, hundreds of technology leaders and researchers penned an open letter stating: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” And while extinction is certainly a bad outcome, most organizations face more pressing and likely risks from AI.

Hallucinations and regulators present two of the most likely AI-related risks for most organizations. The AI hype and the broad availability of Large Language Models (LLMs) and tools such as ChatGPT are only now entering their second year, yet instances of hallucinations and commentary from regulators already indicate where the risks lie. Understanding regulatory intent, and how hallucinations can play into regulators' focus areas, is critical to using AI while mitigating its potential risks.

Wrong at the Speed of AI

Hallucinations are instances where AI provides inaccurate or fabricated results. One New York attorney gained international notoriety last year after submitting a filing in Mata v. Avianca on a narrow legal issue (the tolling effect of bankruptcy under the Montreal Convention, which governs airline liability for international travel). Much of the filing was generated by ChatGPT, including several completely fabricated case citations and inaccurate analysis of applicable (and fake) case law. The attorneys involved were ultimately sanctioned for submitting erroneous information and failing to supervise the technology.

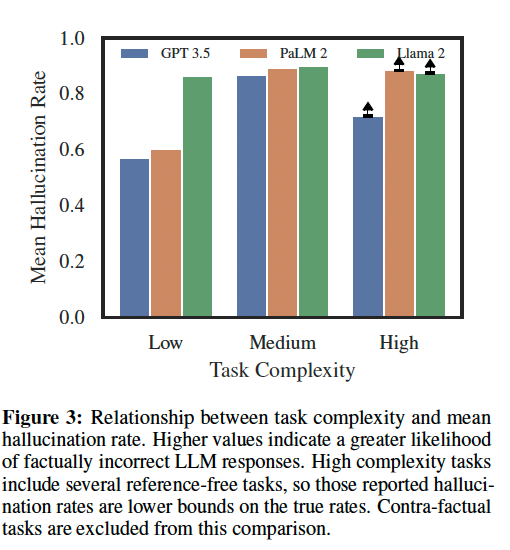

The Avianca case was a useful practical lesson, but a more recent pre-print of a comprehensive study shows that hallucinations are widespread across a range of legal research and analysis tasks (Dahl et al., "Large Legal Fictions: Profiling Hallucinations in Large Language Models," arXiv:2401.01301v1 [cs.CL], 2 Jan 2024). This study is especially important because it is a systematic, methodologically sound test for the presence of hallucinations on common tasks. The tasks ranged from simple queries, such as "Is {case} a real case?", to complex queries, such as "What is the central holding in {case}?"

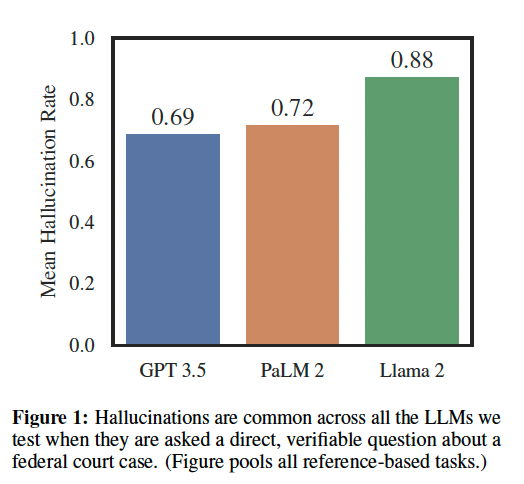

The results showed that the models tested hallucinated between 69% and 88% of the time (Figure 1).

Even simple tasks, such as verifying a case citation or the author of an opinion, had hallucination rates over 50%!

These results matter for organizations because they can serve as a proxy for similar tasks outside the legal domain. There are several takeaways from the Avianca case and this more recent study:

- AI can fabricate information while making it seem authentic, and can do so frequently.

- Analysis, generated content, and decisions based on hallucinations are a demonstrated, credible risk.

- Understanding the training data (in this case, foundation models trained largely on publicly available Internet content) is critical to understanding the (in)accuracy of results for a given task.

- Supervision and QA of AI-generated results are needed wherever high levels of accuracy are required.

The frequency of hallucinations in the study also provides a roadmap for regulators and goes to the heart of concerns they have previously raised about the use of AI.

Regulators are not Hallucinating

Last year we saw a few early regulatory actions on AI, notably when Italy's data protection authority temporarily banned ChatGPT over privacy concerns, and when the European Data Protection Board launched a dedicated task force to coordinate potential enforcement actions against ChatGPT. One of the most telling, however, was a joint statement on "Enforcement Efforts Against Discrimination and Bias in Automated Systems," issued by the Consumer Financial Protection Bureau (CFPB), the U.S. Department of Justice (DOJ), the U.S. Equal Employment Opportunity Commission (EEOC), and the Federal Trade Commission (FTC).

First, the fact that all these agencies issued a joint statement on AI is telling on its own. Second, the agencies have taken the position that they already have the necessary authority under existing statutes and regulations and are not waiting for new AI legislation. Third, the areas in scope are quite broad and may affect a large number of AI, analytics, and automation applications. In particular, the statement notes:

"Although many of these tools offer the promise of advancement, their use also has the potential to perpetuate unlawful bias, automate unlawful discrimination, and produce other harmful outcomes."

Joint Statement, cited above (CFPB, DOJ, EEOC, FTC)

The agencies go a step further and identify potential sources of problems, including:

- Data and Datasets

- Model Opacity and Access

- System Design and Use

The Joint Statement's focus on these sources makes sense, since AI, analytics, and automation solutions are built from all three, and issues with any one of them can lead to a negative outcome.

An important inference can also be drawn from the underlying regulations and guidance referenced in the Joint Statement: the elements that go into the design, model development, deployment, and operation of AI solutions are likely business records subject to various retention and compliance requirements.

What This Means to Organizations

I believe hallucinations and regulatory scrutiny represent the most likely and pressing AI risks for most organizations. There is considerable overlap between the two, since both ultimately turn on the outcome of an AI process, decision, or generated content. Hallucinations that lead to bad outcomes may well be where we see some of the first significant regulatory actions.

At the very least, organizations should apply their broader compliance and governance programs to AI, analytics, and automation initiatives. The Joint Statement's three areas of focus (Data and Datasets, Model Opacity and Access, and System Design and Use) are useful constructs for thinking about AI governance. Organizations need to start capturing and maintaining appropriate records on each of these, along with the decisions and content generated via AI.

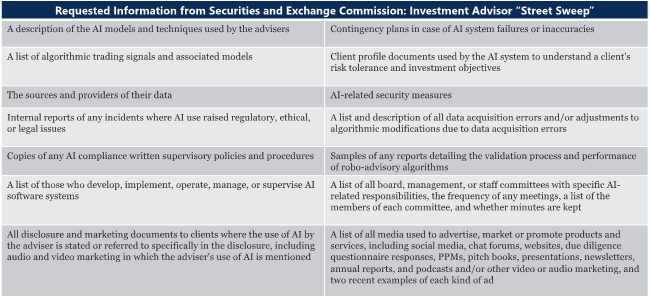

On the latter point, the SEC recently initiated a "Street Sweep" of investment advisers' use of AI, which included the significant record requests shown in the image above. The requested information aligns with the three main categories from the Joint Statement and provides an even more granular look at potential regulatory scrutiny for other industries.

While other industries may not face the same level of regulatory scrutiny as Financial Services, the SEC's request provides a roadmap of the types of information other regulators may find relevant in an inquiry or make subject to record-keeping requirements. Ultimately, organizations will benefit from getting ahead of the curve, before hallucinations or bad outcomes at the speed of AI leave them vulnerable to regulatory action.
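As a purely illustrative sketch of the record-keeping recommendation, the Python below shows one way an organization might capture a single AI-generated output together with metadata grouped under the Joint Statement's three categories. All class and field names (AIOutputRecord, DataRecord, ModelRecord, SystemRecord, reviewer, and so on) are hypothetical assumptions, not taken from the Joint Statement or the SEC's request, and the sketch does not reflect what any particular regulator requires.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


# Hypothetical record types; names are illustrative, not a regulatory schema.
@dataclass
class DataRecord:
    """'Data and Datasets': what data the model was trained on or grounded with."""
    dataset_names: list[str]
    data_sources: list[str]


@dataclass
class ModelRecord:
    """'Model Opacity and Access': which model produced the output, and who may use it."""
    model_name: str
    model_version: str
    access_roles: list[str]


@dataclass
class SystemRecord:
    """'System Design and Use': the business process the AI is embedded in."""
    use_case: str
    human_review_required: bool


@dataclass
class AIOutputRecord:
    """One retained record for a single AI-generated decision or piece of content."""
    data: DataRecord
    model: ModelRecord
    system: SystemRecord
    prompt: str
    output: str
    reviewer: Optional[str] = None  # who supervised / QA'd the output, if anyone
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def content_hash(self) -> str:
        # SHA-256 over the prompt and output, so later alteration can be detected.
        payload = json.dumps({"prompt": self.prompt, "output": self.output}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def to_json(self) -> str:
        # asdict() recursively converts the nested dataclasses into plain dicts.
        record = asdict(self)
        record["content_hash"] = self.content_hash()
        return json.dumps(record, sort_keys=True)


# Usage with placeholder values (all hypothetical).
record = AIOutputRecord(
    data=DataRecord(dataset_names=["public-web-corpus"], data_sources=["vendor foundation model"]),
    model=ModelRecord(model_name="example-llm", model_version="2024-01", access_roles=["legal-research"]),
    system=SystemRecord(use_case="draft legal memo", human_review_required=True),
    prompt="What is the central holding in {case}?",
    output="(model response)",
    reviewer="j.doe",
)
print(record.to_json())
```

Hashing the prompt and output lets a later reviewer check that a retained record has not been altered, and the reviewer field ties each output to the human supervision that the takeaways above call for. A real program would still need retention schedules, access controls, and durable storage around records like these.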

George Tziahanas, AGC and VP of Compliance at Archive360, has extensive experience working with clients on complex compliance and data-risk challenges. He has worked with many large financial services firms to design and deploy petabyte-scale compliant books-and-records systems, supervision and surveillance, and eDiscovery solutions. George also has significant depth in developing strategies and roadmaps that address compliance and data governance requirements. George has always worked with emerging and advancing technologies, introducing them to address real-world problems. He has worked extensively with AI/ML-driven analytics across legal and regulatory use cases, and helps clients adopt these new solutions. George has worked across verticals, with a primary focus on highly regulated enterprises. George holds an M.S. in Molecular Systematics, and a J.D. from DePaul University. He is licensed to practice law in the State of Illinois and before the U.S. District Court for the Northern District of Illinois.